AI-associated data breaches cost organizations more than $650,000 per incident [6]. While enterprises race to deploy AI capabilities, most lack the governance infrastructure to prove what their systems actually did when questions arise. That gap between implementation and accountability creates massive, measurable business risk.

Only 4% of organizations achieve meaningful ROI from AI initiatives, largely because shadow AI proliferation undermines controlled deployment [6]. Over 35 countries are advancing new AI regulations [6]. Transparency is no longer a nice-to-have; it is essential to business continuity.

Most AI implementations operate as black boxes. Tracing decisions back to inputs, validating reasoning paths, demonstrating compliance when regulators arrive: all impossible without the right infrastructure. Organizations that skip the audit framework face data leaks, biased decisions, and regulatory penalties that dwarf implementation costs [6].

Those embedding auditability into their AI architecture from day one move faster through regulatory approvals, carry less operational risk, and earn the stakeholder confidence that comes from demonstrable transparency [6].

This guide examines why auditable AI has become a business imperative, what it means in practice, and how to build systems that satisfy both operational requirements and regulatory demands.

Why AI Auditability Is Now a Business Priority

Enterprise AI adoption has created an organizational paradox. Technical capabilities advance at speed, but governance frameworks remain anchored in pre-AI assumptions about static systems and predictable decision paths. The mismatch between deployment velocity and oversight capability demands immediate architectural attention.

AI Adoption Is Outpacing Governance

Only 30% of organizations surveyed have moved beyond experimentation to deploy AI systems in production, and just 13% manage multiple deployments [1]. Even at that modest adoption level, nearly half (48%) of companies fail to monitor production AI systems for accuracy, drift, or misuse [1]. These are basic governance practices, and they are absent from nearly half of all production deployments.

The challenge extends well beyond official rollouts. Half of the U.S. workforce reports using AI tools at work without knowing whether it is allowed, while 44% knowingly use AI improperly [1]. Forty-six percent of employees admit to uploading sensitive company information and intellectual property to public AI platforms [1].

Traditional security and compliance controls were not designed for AI systems that learn and adapt in real time [20]. Speed-to-market pressure compounds the problem: 45% of respondents cite it as the primary obstacle to governance [1]. Most companies treat governance as a compliance formality rather than a core operational capability [1].

Regulators Demand Explainability and Traceability

The European Union AI Act is the first binding legal framework on AI, introducing a risk-based approach that categorizes systems by impact level [6]. Many enterprise AI applications fall into the “high risk” category, carrying strict obligations for transparency, governance, explainability, and regulatory oversight [6]. Non-compliance penalties reach up to €35 million or 7% of global annual turnover, whichever is higher [6]. U.S. states are moving in the same direction: at least 25 have introduced bills addressing AI concerns, and 18 have signed them into law [6].

Organizational readiness lags well behind. Only 14% of companies report familiarity with major standards such as the NIST AI Risk Management Framework [1]. Meanwhile, 72% of U.S. consumers believe more regulation is needed for AI safety, and only 29% feel current regulations are sufficient [20]. The regulatory environment is tightening, and most enterprises are not prepared for it.

Boards and CxOs Need Defensible AI Systems

Board-level AI governance reveals a striking disconnect between deployment and oversight. More than 88% of organizations report using AI in at least one business function, yet only 39% of Fortune 100 companies have disclosed any form of board oversight of AI [6]. Two-thirds of directors report their boards have “limited to no knowledge or experience” with AI, and nearly one in three say AI does not appear on their agendas [6].

This oversight gap carries measurable costs. Organizations with digitally and AI-savvy boards outperform peers by 10.9 percentage points in return on equity, while those without fall 3.8 percent below industry average [6].

Examining policy versus practice makes the coordination challenge concrete. While 75% of organizations report having AI usage policies, fewer than 60% have dedicated governance roles or incident response playbooks [1]. Governance remains a checkbox exercise at most enterprises. The organizations succeeding with AI integrate monitoring, risk evaluation, and incident response directly into engineering pipelines, and they build automated checks that prevent deployment of under-tested models [1]. For these companies, governance is a performance enabler, not an overhead cost.

What Is Auditable AI?

Most AI systems operate as decision-making black boxes where internal reasoning remains invisible to stakeholders. Auditable AI solves this by building systems whose decisions can be reconstructed, inspected, and justified after the fact. Unlike conventional software with explicit logic paths, AI models require specialized infrastructure to make their decision processes visible and defensible.

Four capabilities work together to make this possible.

Traceability: Linking Decisions to Inputs and Models

Traceability creates an unbroken chain from AI outputs back to their original inputs and processing steps. In practice, it works by documenting each stage of the decision-making process, from data input to final output, for every AI initiative [7].

Consider a loan application denial. With traceability, a loan officer can identify exactly which risk factors and weightings influenced that specific decision [2]. Without it, finding the source of errors or biases is nearly impossible, and the organization is left exposed to both operational failures and regulatory challenges.
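A minimal sketch of what such a trace record might look like for the loan example. The field names, the model identifier, and the factor contributions are all hypothetical, chosen only to illustrate the idea of tying one output to its inputs and model version.

```python
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone
import json
import uuid


@dataclass(frozen=True)
class DecisionTrace:
    """One auditable decision: what went in, which model ran, what came out."""
    inputs: dict
    model_id: str        # hypothetical identifier
    model_version: str
    output: str
    factors: dict        # factor name -> contribution to the score (illustrative)
    trace_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def top_factors(trace, n=3):
    """Return the n factors with the largest absolute contribution."""
    ranked = sorted(trace.factors.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return ranked[:n]


# Illustrative values only; no real model produced these numbers.
trace = DecisionTrace(
    inputs={"income": 42000, "debt_ratio": 0.61, "late_payments": 3},
    model_id="credit-risk",
    model_version="2.3.1",
    output="deny",
    factors={"debt_ratio": -0.48, "late_payments": -0.31, "income": 0.12},
)

print(json.dumps(asdict(trace), indent=2))
print(top_factors(trace, n=2))
```

Storing the model version and the factor contributions next to the inputs is what lets a reviewer answer "which factors drove this specific decision" without rerunning anything.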

Explainability: Making Decisions Understandable

Explainability translates complex AI processes into terms that humans can act on. Where traceability provides the technical audit trail, explainability addresses communication: why did the system reach this particular decision, recommendation, or prediction [2]?

Different stakeholders require different depths. A customer denied a loan needs straightforward reasons. A technical team needs granular details about feature importance to validate model patterns [2]. Risk management needs explanations focused on bias detection and compliance verification. Effective explainability serves the right level of detail to each audience, and the system should be designed to deliver all three from the same underlying data.
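One way to picture "three views from the same underlying data" is a single function that renders one set of factor contributions for each audience. This is a sketch under assumptions: the factor values, the plain-language labels, and the restricted-attribute list are invented for illustration.

```python
# Hypothetical factor contributions for one denied loan (illustrative values).
FACTORS = {"debt_ratio": -0.48, "late_payments": -0.31, "income": 0.12}

# Plain-language labels and the restricted-attribute list are assumptions.
LABELS = {
    "debt_ratio": "debt relative to income",
    "late_payments": "recent late payments",
    "income": "annual income",
}
RESTRICTED = {"age", "gender", "postcode"}


def explain(factors, audience):
    """Render the same factor data at the depth each audience needs."""
    ranked = sorted(factors.items(), key=lambda kv: abs(kv[1]), reverse=True)
    if audience == "customer":
        # Only the strongest negative factors, in plain language.
        reasons = [LABELS.get(name, name) for name, weight in ranked if weight < 0]
        return "Main reasons: " + "; ".join(reasons[:2])
    if audience == "technical":
        # Full signed contributions for model validation.
        return ", ".join(f"{name}={weight:+.2f}" for name, weight in ranked)
    if audience == "risk":
        # Compliance view: did any restricted attribute influence the outcome?
        flagged = sorted(set(factors) & RESTRICTED)
        return "restricted attributes used: " + (", ".join(flagged) or "none")
    raise ValueError(f"unknown audience: {audience!r}")


for audience in ("customer", "technical", "risk"):
    print(f"{audience}: {explain(FACTORS, audience)}")
```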

Provenance: Tracking Data and Model Versions

Data provenance maintains detailed records of data origins and transformation history throughout the AI lifecycle: who created the data, what modifications were made, and the identity of those who made them [3]. Provenance tracking covers model versions and which updates were applied [8], data lineage from creation through transformations to current state [3], and metadata such as timestamps and source identifiers [9]. Without these records, organizations cannot verify that their AI systems are making decisions based on reliable, uncompromised information. The trustworthiness of every AI output depends on the integrity of the data behind it.
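As a rough illustration, provenance can be approximated by fingerprinting each dataset state and linking every record to the state it was derived from. The function names, actors, and source labels below are hypothetical; a model artifact could be fingerprinted in the same way.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint(data):
    """Deterministic SHA-256 hash of JSON-serializable data."""
    payload = json.dumps(data, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def provenance_record(data, step, actor, source=None, parent=None):
    """Describe one state of the data: what it is, who produced it, and from what."""
    return {
        "data_hash": fingerprint(data),
        "step": step,
        "actor": actor,
        "source": source,
        "parent_hash": parent["data_hash"] if parent else None,
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }


def verify(data, record):
    """True if the data still matches the hash captured in its record."""
    return fingerprint(data) == record["data_hash"]


# Hypothetical two-step lineage: ingest, then normalize.
raw = [{"id": 1, "income": "42,000"}, {"id": 2, "income": "58,500"}]
raw_rec = provenance_record(raw, step="ingest", actor="etl-service", source="crm-export")

cleaned = [{"id": r["id"], "income": int(r["income"].replace(",", ""))} for r in raw]
clean_rec = provenance_record(cleaned, step="normalize-income", actor="jane.doe", parent=raw_rec)

print(verify(cleaned, clean_rec))       # data unchanged since it was recorded
print(verify(cleaned[:-1], clean_rec))  # a dropped row no longer matches
```

Because each record carries its parent's hash, an auditor can walk the chain backwards from the data a model consumed to the original export.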

Policy Alignment: Mapping Decisions to Rules

Policy alignment demonstrates that AI systems operate within defined parameters and comply with both internal standards and external regulations. For regulated industries, this means mapping specific decisions to applicable laws. The EU’s General Data Protection Regulation (GDPR), for instance, is widely read as establishing a “right to explanation”: individuals subject to automated decisions can request meaningful information about the logic involved [10].

Effective policy alignment requires detailed documentation: technical specifications, usage instructions, dataset information, and incident reports [11]. This documentation enables organizations to verify adherence to enterprise values and regulatory norms, and it gives stakeholders and regulators a basis for trust.
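The documentation requirement lends itself to a simple automated completeness check. The sketch below assumes a hypothetical registry entry per AI system; the four required document types come from the list above, while the key names and file paths are made up.

```python
# The four document types named above; the key names are assumptions.
REQUIRED_DOCS = {
    "technical_specification",
    "usage_instructions",
    "dataset_information",
    "incident_reports",
}


def missing_documentation(system):
    """Return the required document types that a system has not supplied."""
    present = {name for name, ref in system.get("documents", {}).items() if ref}
    return sorted(REQUIRED_DOCS - present)


# Hypothetical registry entry for one deployed system.
loan_model = {
    "name": "credit-risk",
    "documents": {
        "technical_specification": "docs/credit-risk/spec.md",
        "usage_instructions": "docs/credit-risk/usage.md",
        "dataset_information": "",  # placeholder entry with no document behind it
    },
}

print(missing_documentation(loan_model))
```

A check like this could run in a deployment pipeline so that a system with incomplete documentation never reaches production unnoticed.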

These four dimensions reinforce each other. Traceability without explainability produces logs nobody can interpret. Explainability without provenance produces explanations that cannot be verified. Together, they turn opaque AI systems into infrastructure that organizations can defend under scrutiny.

Why Most AI Systems Today Are Not Auditable

The gulf between AI deployment and transparency reflects fundamental architectural choices that prioritize speed over accountability. Most enterprise AI implementations lack the infrastructure to reconstruct decision pathways, and the blind spots appear exactly where visibility matters most.

Black-Box APIs and Missing Reasoning Logs

Deep learning models operate through high-dimensional representations that resist interpretation even by the experts who built them [12]. This opacity comes from the mathematical architecture of neural networks themselves. When a model processes inputs through millions of parameters, the resulting decision pathways are mathematically dense rather than logically transparent.

API-based implementations compound the problem. Reasoning tokens, the internal “thoughts” that could illuminate model behavior, remain inconsistently available across providers. Some models expose reasoning processes through unified interfaces; others, such as OpenAI’s o-series, do not return their raw reasoning tokens to the caller [13]. Many organizations deploy these systems without configuring reasoning token access, unknowingly eliminating their primary transparency mechanism.

Without reasoning logs, validating model logic is impossible.

Fragmented Tools and Lack of Orchestration

Enterprise AI adoption typically follows departmental proliferation, with individual teams selecting tools based on immediate needs and no coordination across the organization [14]. Multiple AI subscriptions generate redundant costs and security gaps. Isolated solutions cannot share learnings or maintain consistent standards. Disconnected implementations prevent unified compliance frameworks. And innovation stalls when teams rebuild capabilities that others have already developed.

Organizations juggling multiple AI vendors discover that each provider optimizes for its own ecosystem, with business outcomes treated as a secondary concern [4]. Point solutions accumulate without producing coordinated intelligence, and the resulting technical debt constrains future AI initiatives.

Shadow AI and Unapproved Tools

Ninety-eight percent of employees use unsanctioned applications across shadow AI and traditional IT environments [5].

The data exposure risks are immediate. Forty-six percent of employees upload sensitive information and intellectual property to public AI platforms [5]. Beyond data leakage, shadow AI eliminates visibility into AI-driven decisions, security monitoring, and cost management [15]. Organizations cannot audit systems they do not know exist. Traditional blocking mechanisms prove insufficient because employees route around restrictions through personal devices and alternative access methods, and the sheer proliferation of AI tools makes prevention impractical [5]. Productivity benefits ensure continued unauthorized usage regardless of policy.

Innervation’s Approach to Auditable AI

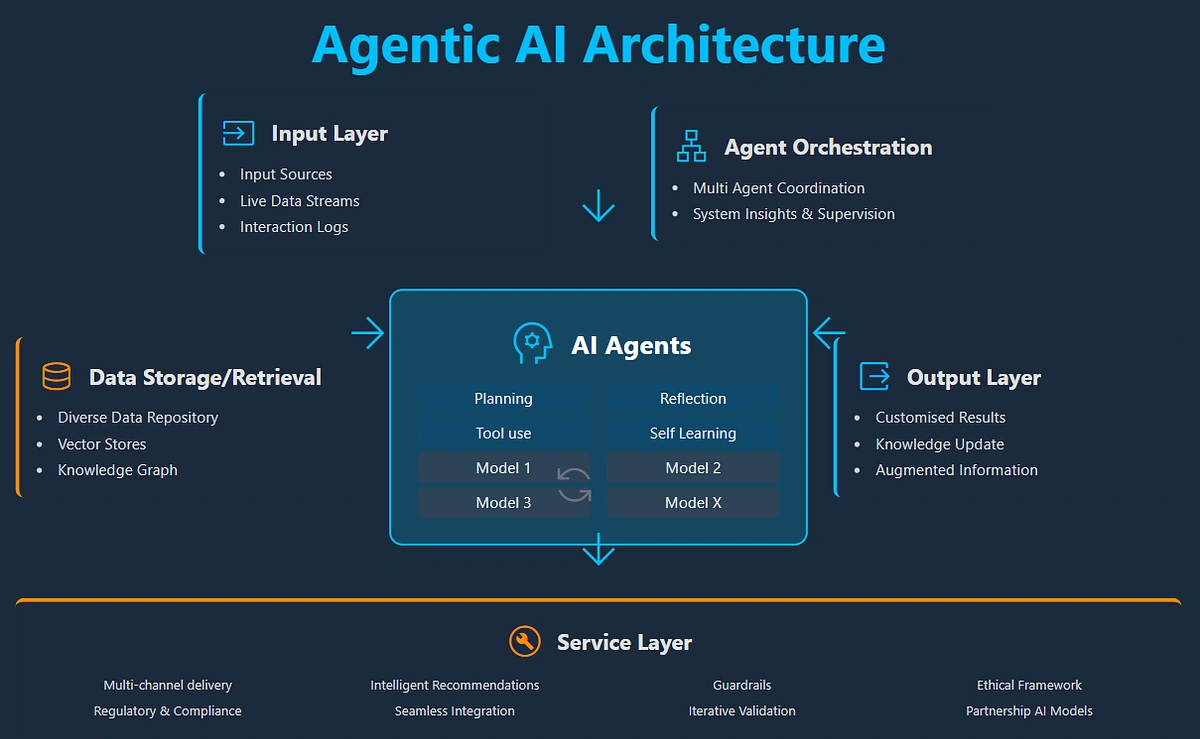

Innervation does not compete with audit solutions. We provide the orchestration infrastructure that makes auditability possible in the first place.

Our platform embeds transparency directly into multi-agent coordination architecture. Where typical AI deployments bolt on monitoring as an afterthought, Innervation treats observability as an architectural requirement. Every agent interaction, reasoning step, and decision path becomes fully auditable by design.

Auditability at the Orchestration Layer

Most audit approaches monitor individual models and miss the complex interactions that drive real business intelligence. Innervation positions auditability at the orchestration layer: the central nervous system coordinating all AI components. This captures what each agent decided, how specialized agents collaborated and validated each other’s work, and how they synthesized final outputs.

The approach mirrors how expert teams naturally operate. Each agent focuses on its domain while meta-orchestrators ensure coordination transparency. Unlike fragmented point solutions, this produces full audit trails across entire AI workflows.

Complete Observability of Agent Interactions

The platform records inter-agent communications, tool calls, intermediate reasoning steps, and state transitions throughout complex workflows. Research agents gather and validate data sources. Analysis agents evaluate findings. Synthesis agents integrate insights. Every one of these interactions becomes part of the permanent audit record.

When regulators or stakeholders ask “how did you reach this conclusion,” you have complete documentation of the collaborative intelligence that produced the result. This level of instrumentation turns previously invisible AI reasoning into transparent, reconstructable decision pathways.

Mathematical Coordination Guarantees

Petri net-based coordination architecture provides formal guarantees for deadlock prevention, race condition elimination, and execution completeness. This mathematical underpinning makes it possible to distinguish “what should have happened” from “what actually happened.” The result is definitive audit trails where approximate monitoring would fall short. Graph-based workflows ensure reliable task completion and predictable execution; single-agent architectures cannot offer equivalent coordination guarantees.
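For readers unfamiliar with Petri nets, the toy simulator below shows the basic mechanics: places hold tokens, and a transition may fire only when every input place holds one. It is a generic textbook-style sketch with hypothetical agent names, not Innervation's implementation, and it only detects a deadlock at run time. A formal guarantee would come from analyzing every reachable marking, which this example does not attempt.

```python
from collections import Counter


class PetriNet:
    """Places hold tokens; a transition fires only when all its inputs are marked."""

    def __init__(self, transitions, marking):
        self.transitions = transitions   # name -> (input places, output places)
        self.marking = Counter(marking)  # place -> token count
        self.fired = []                  # actual firing order: the audit trail

    def enabled(self):
        """Names of transitions whose input places all hold enough tokens."""
        return [
            name
            for name, (inputs, _) in self.transitions.items()
            if all(self.marking[p] >= n for p, n in Counter(inputs).items())
        ]

    def fire(self, name):
        """Consume input tokens and produce output tokens, or refuse."""
        if name not in self.enabled():
            raise RuntimeError(f"{name!r} fired without its preconditions")
        inputs, outputs = self.transitions[name]
        self.marking.subtract(Counter(inputs))
        self.marking.update(Counter(outputs))
        self.fired.append(name)

    def run(self, goal):
        """Fire enabled transitions until the goal place is marked."""
        while self.marking[goal] == 0:
            ready = self.enabled()
            if not ready:
                raise RuntimeError(f"deadlock before reaching {goal!r}")
            self.fire(ready[0])
        return self.fired


# Hypothetical workflow: research fans out, synthesis joins both branches.
WORKFLOW = {
    "research": (["request"], ["facts", "sources"]),
    "analyze": (["facts"], ["analysis"]),
    "validate": (["sources"], ["validated"]),
    "synthesize": (["analysis", "validated"], ["report"]),
}

net = PetriNet(WORKFLOW, {"request": 1})
print(net.run(goal="report"))
```

The net definition is the record of what should have happened, and `fired` is the record of what actually happened. Removing the `validate` transition makes `run` stop with a deadlock error instead of silently producing an unvalidated report.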

Model-Agnostic Infrastructure Design

The platform maintains complete vendor neutrality. Organizations can swap underlying models, integrate new capabilities, or adapt to changing requirements without disrupting their audit framework. OpenAI, Anthropic, Google, open-source alternatives: Innervation orchestrates them all while maintaining full transparency.

System-agnostic design also means the platform integrates with existing enterprise infrastructure without requiring wholesale replacement. Organizations focus their resources on building solutions; Innervation handles the complexity of auditable multi-agent coordination. And because the coordination architecture is decoupled from any single model provider, the governance infrastructure you invest in today continues to serve you as models evolve and pricing shifts beneath it.

See how Innervation embeds auditability into multi-agent orchestration from day one, giving your organization complete decision traceability.

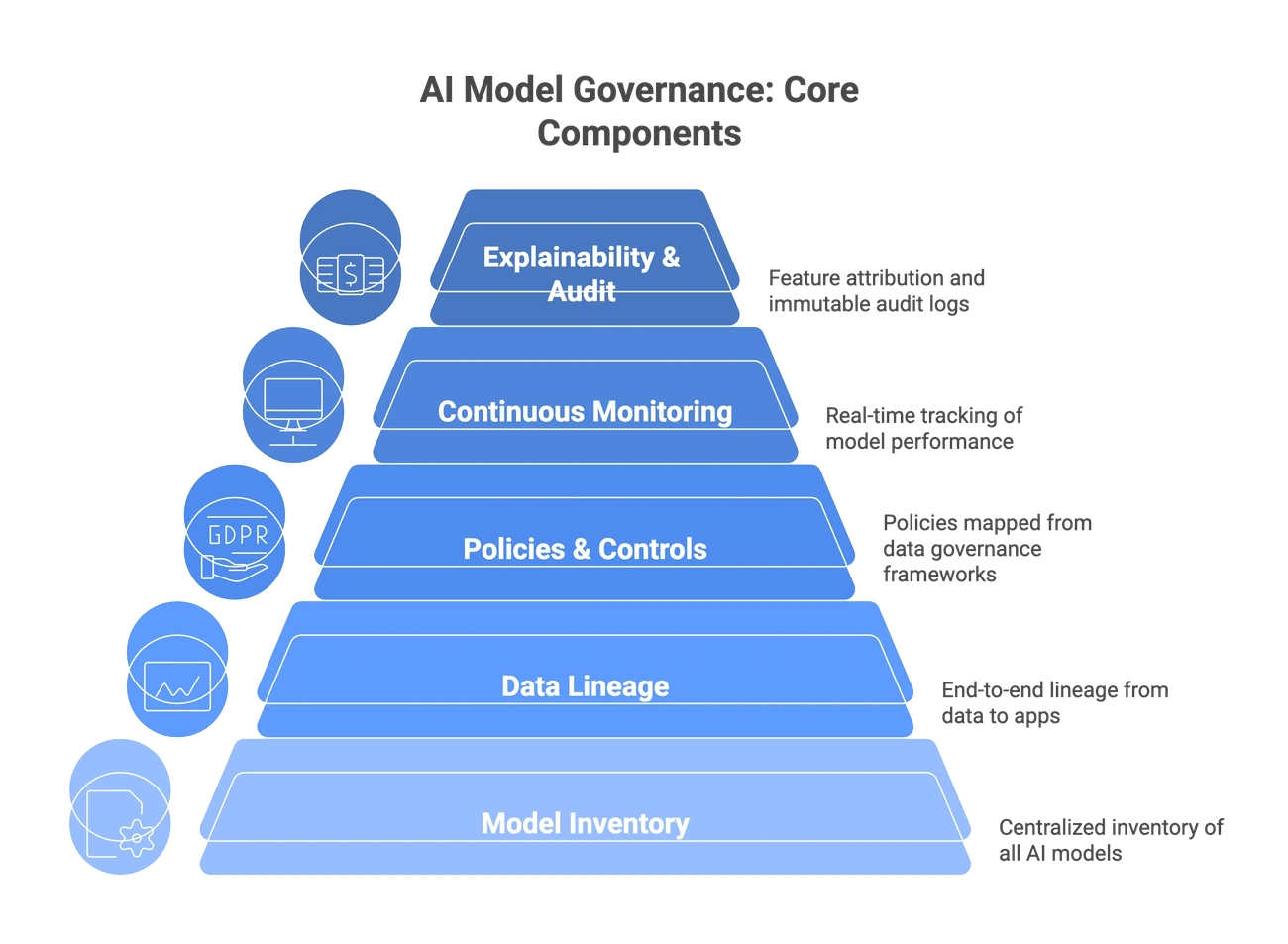

Architecture of an Auditable AI System

Building auditable AI requires embedding transparency into the architecture from the start. The challenge is designing systems where every component contributes to a unified audit fabric.

Instrumentation Layer: Logging All Steps

The instrumentation layer functions as the audit backbone. It captures telemetry data across metrics, events, logs, and traces throughout system execution: chronological records of every action, user-AI interaction, prompt, response, tool call, and decision path, each with a millisecond-precision timestamp.

Most implementations fail at this layer because they instrument outputs without capturing intermediate reasoning steps. Effective instrumentation documents what happened and how the system arrived at each decision point. The goal is an unbroken chain from inputs to final outputs.
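A minimal sketch of what step-level instrumentation could look like, assuming an in-memory, append-only log. The event kinds, payload shapes, and the `sql_query` tool name are hypothetical; a production system would persist events to durable storage.

```python
import json
import time
import uuid


class AuditLog:
    """Append-only, in-memory event log (a real system would persist it)."""

    def __init__(self):
        self._events = []

    def record(self, run_id, kind, payload):
        """Append one event with a sequence number and millisecond timestamp."""
        event = {
            "run_id": run_id,
            "seq": len(self._events),
            "ts_ms": time.time_ns() // 1_000_000,
            "kind": kind,
            "payload": payload,
        }
        self._events.append(event)
        return event

    def trail(self, run_id):
        """All events for one run, in the order they were recorded."""
        return [e for e in self._events if e["run_id"] == run_id]


log = AuditLog()
run_id = str(uuid.uuid4())

# Intermediate steps are recorded alongside the prompt and final response.
log.record(run_id, "prompt", {"text": "Summarize Q3 churn drivers"})
log.record(run_id, "reasoning", {"text": "Need churn figures before summarizing"})
log.record(run_id, "tool_call", {"tool": "sql_query", "args": {"table": "churn_q3"}})
log.record(run_id, "tool_result", {"rows": 128})
log.record(run_id, "response", {"text": "Top driver: pricing changes"})

for event in log.trail(run_id):
    print(json.dumps(event))
```

The point of the example is the three middle events: a log holding only the prompt and the response would show what the system said but not how it got there.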

Governance Layer: Policy Tagging and Enforcement

Policy enforcement operates through active tagging mechanisms that map AI behaviors to compliance requirements in real time. The governance layer maintains version histories of all components while automatically generating compliance evidence for regulatory frameworks. Unlike passive monitoring, this layer enforces constraints during execution, catching violations before they propagate. Each decision receives tags for applicable rules, and non-compliant operations are blocked from proceeding.
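The difference between passive monitoring and active enforcement can be sketched in a few lines. Everything named here is an assumption made for illustration: the two policies, the approved-model list, and the shape of an operation.

```python
from dataclasses import dataclass
from typing import Callable


class PolicyViolation(Exception):
    """Raised when an operation fails a policy that applies to it."""


@dataclass(frozen=True)
class Policy:
    tag: str
    applies: Callable[[dict], bool]  # does this rule cover the operation?
    check: Callable[[dict], bool]    # does the operation satisfy the rule?


# Hypothetical rules and model names, for illustration only.
APPROVED_MODELS = {"model-a", "model-b"}
POLICIES = [
    Policy(
        "pii-must-be-redacted",
        applies=lambda op: op.get("contains_pii", False),
        check=lambda op: op.get("redacted", False),
    ),
    Policy(
        "approved-model-only",
        applies=lambda op: True,
        check=lambda op: op.get("model") in APPROVED_MODELS,
    ),
]

evidence = []  # compliance evidence accumulates as a side effect of enforcement


def enforce(op):
    """Tag the operation with applicable policies; block it if any check fails."""
    applicable = [p for p in POLICIES if p.applies(op)]
    failed = [p.tag for p in applicable if not p.check(op)]
    record = {
        "operation": op["name"],
        "tags": [p.tag for p in applicable],
        "failed": failed,
        "allowed": not failed,
    }
    evidence.append(record)
    if failed:
        raise PolicyViolation(f"{op['name']} blocked by {failed}")
    return record


print(enforce({"name": "summarize-report", "model": "model-a"}))
try:
    enforce({"name": "score-applicant", "model": "model-a", "contains_pii": True})
except PolicyViolation as exc:
    print(exc)
```

Both the allowed and the blocked operation leave an evidence record, so the audit trail covers what was prevented as well as what ran.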

Observability and Replay for Audits

Observability infrastructure transforms collected telemetry into actionable audit trails through correlation engines that connect information across different data types. Teams can pinpoint exact failure points when issues arise and reconstruct complete decision paths for regulatory review.

The replay capability is what makes forensic analysis practical. Auditors can step through decision logic as if observing the original process, which proves essential when demonstrating compliance or investigating unexpected behaviors.
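Replay can be illustrated with a small sketch: re-execute each deterministic step on its recorded input and compare the result with the recorded output. The step names, handlers, and recorded values are hypothetical. Non-deterministic steps such as a model call are treated as recorded-only here, which is one possible design choice among several.

```python
def normalize(amount_text):
    """Deterministic step: parse a formatted amount."""
    return int(amount_text.replace(",", ""))


def risk_band(income):
    """Deterministic step: bucket income into a band (threshold is illustrative)."""
    return "high" if income < 30000 else "standard"


# Hypothetical recording of one decision path.
RECORDED = [
    {"step": "normalize", "input": "42,000", "output": 42000},
    {"step": "risk_band", "input": 42000, "output": "standard"},
    {"step": "model_call", "input": "band=standard", "output": "approve"},
]

HANDLERS = {"normalize": normalize, "risk_band": risk_band}


def replay(events, handlers):
    """Re-run deterministic steps on recorded inputs and compare the outputs."""
    report = []
    for event in events:
        handler = handlers.get(event["step"])
        if handler is None:
            status = "recorded-only"  # e.g. a model call: rely on the stored output
        elif handler(event["input"]) == event["output"]:
            status = "match"
        else:
            status = "MISMATCH"
        report.append((event["step"], status))
    return report


for step, status in replay(RECORDED, HANDLERS):
    print(f"{step}: {status}")
```

A mismatch signals that the code running today no longer reproduces the recorded decision, which is exactly what an investigator needs to know before drawing conclusions from a replay.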

Separation of Concerns for Model Flexibility

Modular architecture principles ensure each component maintains distinct responsibilities within the audit framework. The coordination infrastructure remains stable while underlying models evolve, which means audit systems operate independently of specific model implementations. Organizations can swap models, integrate new capabilities, or modify reasoning approaches while preserving their entire investment in governance and compliance. The infrastructure endures even as the models beneath it change.
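The separation described here can be sketched as an audit wrapper that depends only on a small interface, never on a specific provider. The `Model` protocol and the two stand-in backends below are hypothetical; real provider SDK calls would sit behind the same `complete` method.

```python
from typing import Protocol


class Model(Protocol):
    """The only contract the audit layer relies on."""
    name: str

    def complete(self, prompt: str) -> str: ...


class UpperModel:
    """Stand-in backend; a real one would call a provider SDK."""
    name = "upper-v1"

    def complete(self, prompt: str) -> str:
        return prompt.upper()


class ReverseModel:
    """A second stand-in backend with different behavior."""
    name = "reverse-v1"

    def complete(self, prompt: str) -> str:
        return prompt[::-1]


class AuditedModel:
    """Wraps any Model and records every call in the same format."""

    def __init__(self, model: Model, log: list):
        self.model = model
        self.log = log

    def complete(self, prompt: str) -> str:
        output = self.model.complete(prompt)
        self.log.append({"model": self.model.name, "prompt": prompt, "output": output})
        return output


audit_log = []
for backend in (UpperModel(), ReverseModel()):
    AuditedModel(backend, audit_log).complete("hello")

for entry in audit_log:
    print(entry)
```

Swapping the backend changes the output but not the shape of the audit record, which is the property that lets governance tooling outlive any particular model.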

Conclusion

Organizations that can prove what their AI systems did will separate themselves from those that merely hope their systems performed correctly. The gap between AI implementation and accountability has reached a tipping point. Auditability determines whether your AI initiatives grow or stall.

Most organizations scramble to add transparency after the fact, but the ones building observability, traceability, and policy alignment into their AI coordination architecture from day one create defensible systems that satisfy regulators, boards, and customers. Model-agnostic platforms that capture agent interactions, tool calls, and reasoning steps across complex workflows provide the architectural bedrock for this approach, keeping your governance infrastructure stable even as the models beneath it evolve. The architectural choices made this year will determine how much freedom or constraint your AI programs have for the next five.

Enterprise leaders face a straightforward decision: invest in auditable AI systems now, or spend the next several years explaining to stakeholders why your AI programs cannot demonstrate compliance or justify their decisions. The organizations choosing transparency are already seeing returns in faster deployment cycles, stronger stakeholder confidence, and the operational intelligence you only get from systems you can actually trust.

Ready to build AI systems your stakeholders can trust? Let’s discuss how Innervation’s auditable orchestration platform fits your governance requirements.

Key Takeaways

- AI governance gaps create massive business risk – Only 30% of organizations have moved beyond AI experimentation, while 48% fail to monitor production systems for accuracy or misuse.

- Regulatory compliance is now mandatory – The EU AI Act imposes penalties of up to €35 million or 7% of global annual turnover for non-compliant AI systems, and other jurisdictions are advancing their own rules.

- Shadow AI threatens organizational security – 98% of employees use unsanctioned applications across shadow AI and traditional IT environments, and 46% upload sensitive company data to public AI platforms.

- Auditable AI rests on four pillars – Traceability (linking decisions to inputs and models), explainability (making decisions understandable), provenance (tracking data and model versions), and policy alignment (mapping decisions to rules).

- Embed auditability at the coordination layer from the start – Gain visibility across all AI components and agents, not just individual models.

Frequently Asked Questions

The EU AI Act is in force, U.S. states are advancing their own legislation, and boards are increasingly expected to demonstrate oversight of AI systems. Enterprises without auditable AI face penalties, operational risk, and erosion of stakeholder trust. Auditability is the mechanism by which organizations prove how their systems make decisions.

Traceability links outputs to inputs. Explainability translates AI reasoning for human audiences. Provenance documents data origins and model versions. Policy alignment maps decisions to regulatory and internal rules. Each addresses a different dimension of accountability, and they reinforce each other: traceability without explainability produces logs nobody can read, while explainability without provenance produces explanations nobody can verify.

98% of employees use unsanctioned applications. 46% upload sensitive company information to public AI platforms. Traditional blocking mechanisms cannot keep pace.

Positioning auditability at the orchestration layer, the coordination point connecting all AI components, provides the broadest visibility. This design captures interactions across multi-agent systems including inter-agent communications, tool calls, and reasoning steps. Single-model monitoring misses the agent-to-agent dynamics where most enterprise AI decisions actually take shape.

Organizations with auditable AI systems move through regulatory approvals faster and reduce exposure to operational incidents. Boards with AI-savvy governance outperform peers by 10.9 percentage points in return on equity [6]. Beyond the numbers, transparency unlocks deployment speed: when you can prove your system works as intended, you spend less time in review cycles and more time in production.

References

- ISACA – The Rise of Shadow AI

- Transcend – AI Governance Auditing

- Magic Mirror – AI Transparency Framework

- CIO – AI Governance Gaps

- KPMG – Trust in AI 2025

- CIO Dive – The AI Governance Gap

- Latinia – The EU AI Act Is Now in Force

- Hyperight – Explainability in AI Regulatory Frameworks

- McKinsey – The AI Reckoning: How Boards Can Evolve

- Unframe – What Is AI Auditability

- McKinsey – Why Businesses Need Explainable AI

- IBM – Data Provenance

- Fisher Phillips – AI Governance 101

- Kigen – Data Provenance: Enhancing AI Authenticity

- Congruity360 – Explainable AI

- Partnership on AI – Policy Alignment on AI Transparency

- Palo Alto Networks – Black Box AI

- Blackbox AI – Reasoning API Reference

- Tungsten Automation – AI Fragmentation

- InformationWeek – The AI Orchestration Gap

- Varonis – Shadow AI

- Zylo – Shadow AI